October 10, 2025

Throughout my data science journey, I’ve come across countless resources — from courses to books — that start by outlining a process for running a successful data project.

While these frameworks go by different names, their core steps are remarkably similar.

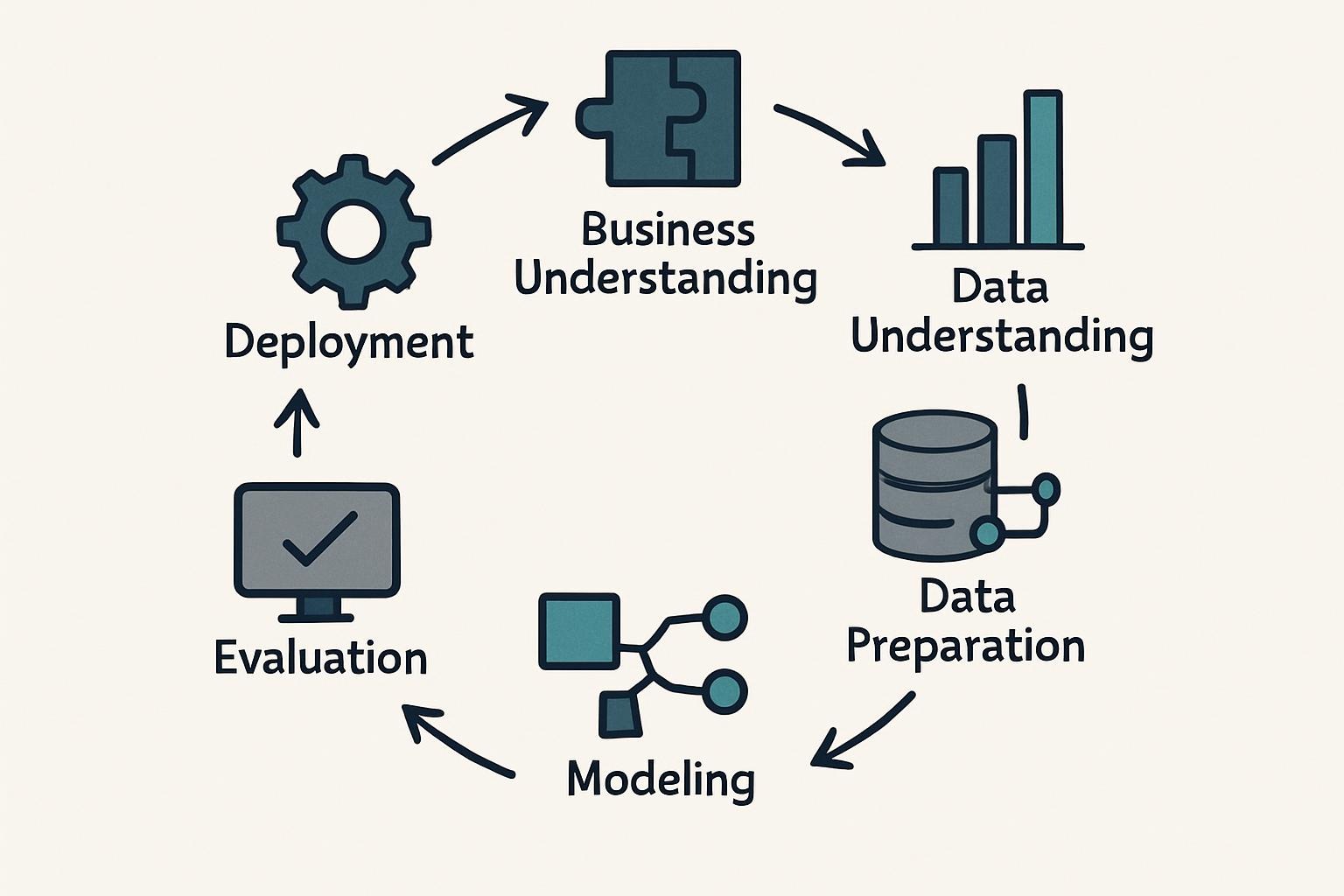

In this post, I want to share the most widely recognized framework used across industries to structure and guide data projects: the Cross-Industry Standard Process for Data Mining, better known as CRISP-DM.

The Framework

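CRISP-DM breaks a data project into six stages, each one building on the last to move an idea from business problem to actionable insight. The process is iterative rather than strictly linear: teams often loop back to earlier stages as they learn more about the problem and the data.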

1. Business Understanding

Every data project starts with understanding the core objective — what problem is being solved and why it matters.

This is the stage where you clarify the business goal, define success metrics, and outline constraints like time, budget, and resources.

It’s also the time to develop a clear project plan and identify potential risks. The goal is to ensure that everyone, data scientists and business stakeholders alike, shares a common understanding of what success looks like.
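One way to make success metrics concrete is to write them down as explicit, checkable thresholds. The sketch below is illustrative only: the `SuccessCriterion` class, the churn-prediction framing, and the metric names and target values are all hypothetical placeholders for whatever your stakeholders actually agree on.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class SuccessCriterion:
    """One measurable target agreed with stakeholders (hypothetical structure)."""
    metric: str                   # illustrative metric name, e.g. "recall"
    threshold: float              # value the project must reach
    higher_is_better: bool = True

    def is_met(self, observed: float) -> bool:
        # Compare the observed value against the agreed threshold
        if self.higher_is_better:
            return observed >= self.threshold
        return observed <= self.threshold


# Hypothetical criteria for an illustrative churn-prediction project
CRITERIA = [
    SuccessCriterion("recall", 0.70),
    SuccessCriterion("precision", 0.50),
    SuccessCriterion("scoring_latency_ms", 200.0, higher_is_better=False),
]


def unmet_criteria(results: dict) -> list:
    """Return the names of criteria that are missing from results or not met."""
    return [
        c.metric
        for c in CRITERIA
        if c.metric not in results or not c.is_met(results[c.metric])
    ]
```

For example, `unmet_criteria({"recall": 0.74, "precision": 0.45, "scoring_latency_ms": 120.0})` returns `["precision"]`, giving data scientists and stakeholders the same unambiguous answer to "are we done yet?"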

2. Data Understanding

Next comes exploring and assessing the available data.

In this phase, the project team gathers the initial data, examines its structure, and evaluates its quality and relevance to the business objective.

It’s important to verify whether the data truly measures what matters — identifying gaps, biases, or limitations early saves significant rework later.
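A first pass at data understanding is often a simple per-column profile: how many values are missing and how varied each column is. Here is a minimal standard-library sketch, assuming the data arrives as a list of dictionaries; the column names and records are hypothetical, and in practice a library such as pandas would usually handle this.

```python
from collections import Counter


def profile(rows: list) -> dict:
    """Per-column summary: missing values, distinct values, most common value."""
    columns = sorted({key for row in rows for key in row})
    summary = {}
    for col in columns:
        # Absent keys, None and empty strings are all treated as missing
        values = [row.get(col) for row in rows]
        present = [v for v in values if v is not None and v != ""]
        missing = len(values) - len(present)
        summary[col] = {
            "missing": missing,
            "missing_pct": round(100 * missing / len(rows), 1),
            "distinct": len(set(present)),
            "most_common": Counter(present).most_common(1)[0][0] if present else None,
        }
    return summary


# Hypothetical sample records
rows = [
    {"customer_id": 1, "region": "north", "monthly_spend": 42.0},
    {"customer_id": 2, "region": "", "monthly_spend": 17.5},
    {"customer_id": 3, "region": "north", "monthly_spend": None},
    {"customer_id": 4, "region": "south"},
]

for column, stats in profile(rows).items():
    print(column, stats)
```

Even a summary this crude surfaces the questions worth asking early, such as why a quarter of the region values are blank.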

3. Data Preparation

Once the data has been validated, it needs to be transformed into a format suitable for analysis.

This phase involves cleaning, combining, and restructuring the data to create a reliable dataset ready for modeling.

Think of this as the behind-the-scenes work that determines whether your model will succeed — poor data preparation almost always leads to weak results.
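The three activities described above (cleaning, combining, and restructuring) can be sketched in a few lines. This is an illustration under assumptions: the `customers` and `orders` record layouts are hypothetical, and median imputation is just one common way to fill missing numeric values, not a step CRISP-DM prescribes.

```python
from statistics import median


def prepare(customers: list, orders: list) -> list:
    """Clean customer records and attach each customer's order total (illustrative)."""
    # Cleaning: drop rows without an ID and normalise region labels
    cleaned = []
    for row in customers:
        if row.get("customer_id") is None:
            continue
        region = (row.get("region") or "").strip().lower() or "unknown"
        cleaned.append({**row, "region": region})  # copy, so inputs stay untouched

    # Cleaning: fill missing spend with the median of the observed values
    observed = [r["monthly_spend"] for r in cleaned if r.get("monthly_spend") is not None]
    fill = median(observed) if observed else 0.0
    for r in cleaned:
        if r.get("monthly_spend") is None:
            r["monthly_spend"] = fill

    # Restructuring: aggregate order amounts into one total per customer
    totals = {}
    for order in orders:
        cid = order["customer_id"]
        totals[cid] = totals.get(cid, 0.0) + order["amount"]

    # Combining: left-join the totals onto customers (no orders -> 0.0)
    return [{**r, "order_total": totals.get(r["customer_id"], 0.0)} for r in cleaned]


# Hypothetical raw inputs
customers = [
    {"customer_id": 1, "region": " North ", "monthly_spend": 40.0},
    {"customer_id": 2, "region": None, "monthly_spend": None},
    {"customer_id": None, "region": "south", "monthly_spend": 10.0},
    {"customer_id": 3, "region": "South", "monthly_spend": 20.0},
]
orders = [
    {"customer_id": 1, "amount": 5.0},
    {"customer_id": 1, "amount": 7.5},
    {"customer_id": 3, "amount": 2.0},
]

for row in prepare(customers, orders):
    print(row)
```

Each step here is a decision (drop or keep, which fill value, which join) that quietly shapes what the model will later learn, which is why this stage deserves the care the section describes.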

4. Modeling

This is often seen as the most exciting stage — where the data finally meets the algorithms.

Here, you select the right modeling technique based on the project’s needs.

For example, if interpretability is key, a regression model may be more suitable than a complex black-box approach. The guiding question is always:

What’s the best balance between accuracy and usability?
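To see why a regression model counts as interpretable, consider ordinary least squares with a single feature: the fitted slope reads directly as "how much the prediction changes per unit of input." The sketch below uses the closed-form estimates (slope = Σ(x − x̄)(y − ȳ) / Σ(x − x̄)², intercept = ȳ − slope · x̄); the function names and the ad-spend and sales figures are made up for illustration.

```python
def fit_simple_ols(x: list, y: list) -> tuple:
    """Ordinary least squares for one feature: returns (slope, intercept)."""
    n = len(x)
    if n != len(y) or n < 2:
        raise ValueError("need two equal-length sequences with at least 2 points")
    mean_x = sum(x) / n
    mean_y = sum(y) / n
    # Closed-form OLS: slope = sum of cross-deviations / sum of squared x-deviations
    sxy = sum((xi - mean_x) * (yi - mean_y) for xi, yi in zip(x, y))
    sxx = sum((xi - mean_x) ** 2 for xi in x)
    if sxx == 0:
        raise ValueError("x must not be constant")
    slope = sxy / sxx
    intercept = mean_y - slope * mean_x
    return slope, intercept


def predict(slope: float, intercept: float, x_new: float) -> float:
    """Prediction is just intercept plus slope times the input."""
    return intercept + slope * x_new


# Made-up example: advertising spend (x) vs. units sold (y)
ad_spend = [1, 2, 3, 4, 5]
units_sold = [3, 5, 7, 9, 11]
slope, intercept = fit_simple_ols(ad_spend, units_sold)
print(f"Each extra unit of spend adds {slope:.2f} units sold (intercept {intercept:.2f})")
```

On this toy data the fit is exact, with a slope of 2.0 and an intercept of 1.0, so each additional unit of spend adds two predicted units sold. A black-box model may fit messier data more accurately, but it offers no comparably direct reading.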

5. Evaluation

Before a model can be deployed, it must be rigorously evaluated.

This phase checks whether the model’s predictions are accurate, reliable, and aligned with the success criteria defined earlier in the project.

Model evaluation is often iterative — teams cycle between modeling and evaluation until results are satisfactory. The focus is on validating the business relevance of the insights, not just their statistical accuracy.
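Evaluation ties back to the success criteria set during Business Understanding. As a hedged sketch for a binary classifier scored on held-out data, the function below computes accuracy, precision, and recall and checks them against target thresholds; the `evaluate` name, the labels, and the threshold values are hypothetical.

```python
def evaluate(y_true: list, y_pred: list, min_precision: float, min_recall: float) -> dict:
    """Score binary predictions (1 = positive) on held-out data against target thresholds."""
    if len(y_true) != len(y_pred) or not y_true:
        raise ValueError("y_true and y_pred must be non-empty and equal length")
    pairs = list(zip(y_true, y_pred))
    tp = sum(1 for t, p in pairs if t == 1 and p == 1)
    fp = sum(1 for t, p in pairs if t == 0 and p == 1)
    fn = sum(1 for t, p in pairs if t == 1 and p == 0)
    tn = sum(1 for t, p in pairs if t == 0 and p == 0)

    precision = tp / (tp + fp) if tp + fp else 0.0
    recall = tp / (tp + fn) if tp + fn else 0.0
    accuracy = (tp + tn) / len(pairs)
    return {
        "accuracy": accuracy,
        "precision": precision,
        "recall": recall,
        # The model only "passes" if it clears every agreed threshold
        "meets_criteria": precision >= min_precision and recall >= min_recall,
    }


# Hypothetical hold-out labels and model predictions
y_true = [1, 0, 1, 1, 0, 0, 1, 0]
y_pred = [1, 0, 0, 1, 1, 0, 1, 0]
print(evaluate(y_true, y_pred, min_precision=0.8, min_recall=0.7))
```

Here precision and recall both come out at 0.75, so the model clears a 0.7 recall target but misses a 0.8 precision target. That result would send the team back to modeling rather than forward to deployment.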

6. Deployment

Finally, the model (or analytical output) is integrated into real-world operations.

This could mean deploying a predictive model into a production system, embedding insights into a dashboard, or sharing findings in a report.

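But deployment isn’t the end. Models need ongoing monitoring because their performance can degrade over time as the underlying data changes. Continuous evaluation helps catch this drift, along with any emerging bias, early enough to retrain or adjust the model before its predictions lose value.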
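Monitoring can start as simply as tracking accuracy over a rolling window of recent predictions and flagging when it falls below the level measured during evaluation. This sketch assumes the true outcome eventually becomes known for each prediction; `AccuracyMonitor`, the window size, and the tolerance are hypothetical choices, and checking for bias would additionally require breaking the metric down by group.

```python
from collections import deque
from typing import Optional


class AccuracyMonitor:
    """Rolling accuracy over recent predictions, compared with an evaluation baseline."""

    def __init__(self, baseline: float, tolerance: float = 0.05, window: int = 500):
        self.baseline = baseline              # accuracy measured during Evaluation
        self.tolerance = tolerance            # hypothetical allowed drop before alerting
        self.outcomes = deque(maxlen=window)  # 1 = correct, 0 = incorrect; oldest drop off

    def record(self, prediction, actual) -> None:
        """Log one prediction once its true outcome is known."""
        self.outcomes.append(1 if prediction == actual else 0)

    def rolling_accuracy(self) -> Optional[float]:
        if not self.outcomes:
            return None
        return sum(self.outcomes) / len(self.outcomes)

    def needs_attention(self) -> bool:
        """True when recent accuracy has fallen below baseline minus tolerance."""
        acc = self.rolling_accuracy()
        return acc is not None and acc < self.baseline - self.tolerance


# Illustrative run with a tiny window: 10 correct predictions, then 3 misses
monitor = AccuracyMonitor(baseline=0.90, tolerance=0.05, window=10)
for _ in range(10):
    monitor.record("churn", "churn")
for _ in range(3):
    monitor.record("churn", "stay")
print(monitor.rolling_accuracy(), monitor.needs_attention())
```

Because the window only keeps the most recent outcomes, the alert reflects current performance rather than being diluted by months of earlier, healthier predictions.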

Conclusion

The CRISP-DM framework provides a structured roadmap for moving any data project forward — from defining the business problem to delivering measurable value.

While I’ve framed these steps around a machine learning project, they apply equally to other types of analytics work, whether it’s dashboard development or exploratory reporting.

It can also be helpful to view CRISP-DM through four broader lenses:

- Business Context

- Data Engineering

- Analytical Modeling

- Implementation

Keeping this structure in mind will help ensure your projects stay aligned, efficient, and impactful.

Good luck turning data into value for your organization!